In this age of AI abundance, we need more playful curiosity.

As the train of progress passes, don't be the person screaming at the smoke.

When a master of a form speaks, we owe it to ourselves to listen — not because everything they say is the only way, but because they did find a way. And behind that leading locomotive of results are all the freight cars of progress, the process that we can infer and glean, but only if we pay attention.

Let me contrast and compare.

Billy Ray’s problem is one of ignorance. He’s staunchly anti-AI. (My positions within the whole domain are mixed, because hey, subtlety and nuance amirite?) While I’ve enjoyed some of his artistry a great deal, he’s missing out by not trying AI writing tools for himself. Maybe then he’d feel less fearful (fear being the most primal human reaction) and be in a better position to understand AI’s limitations, rather than speaking at a distance, talking past others, and ironically (for someone who writes so well) not really understanding what he’s against… which smacks of illiteracy.

Ray repeatedly “hears” things about AI, but that’s not firsthand knowledge. That sounds more like rumormongering. I come across “antis” on Reddit who claim “I don’t need to try it to hate it,” which is only a few steps removed from other prejudice. But why resist, when the barrier to entry is so low (free)?

I’ve tried AI tools that were badly overhyped, laughable pieces of junk! I’ve dabbled in others I really had no ongoing use for. But every now and then, one will scratch an itch, ignite a spark, and I’ll get curious and play with it, to the point where it might just find a place in my regular workflows. I believe this to be a lot more productive and progressive than tarring it all with the same brush (again: prejudices).

I notice a commonality in a lot of these uninformed anti-AI views: selfishness and a lack of consideration for others’ experiences. More fundamentally, and more importantly than entertainment, AI helps empower neurodivergent people, the elderly, and people in developing countries. They come to the table at a significant disadvantage, and are interested in bridging the gap. Someone who’s already established and successful can attack AI, because they don’t need it for accessibility, but that’s a failure on their part to consider broader humanity. It comes from a place of insecurity and dragging down others: the very others who need this technology to participate and even be considered equals.

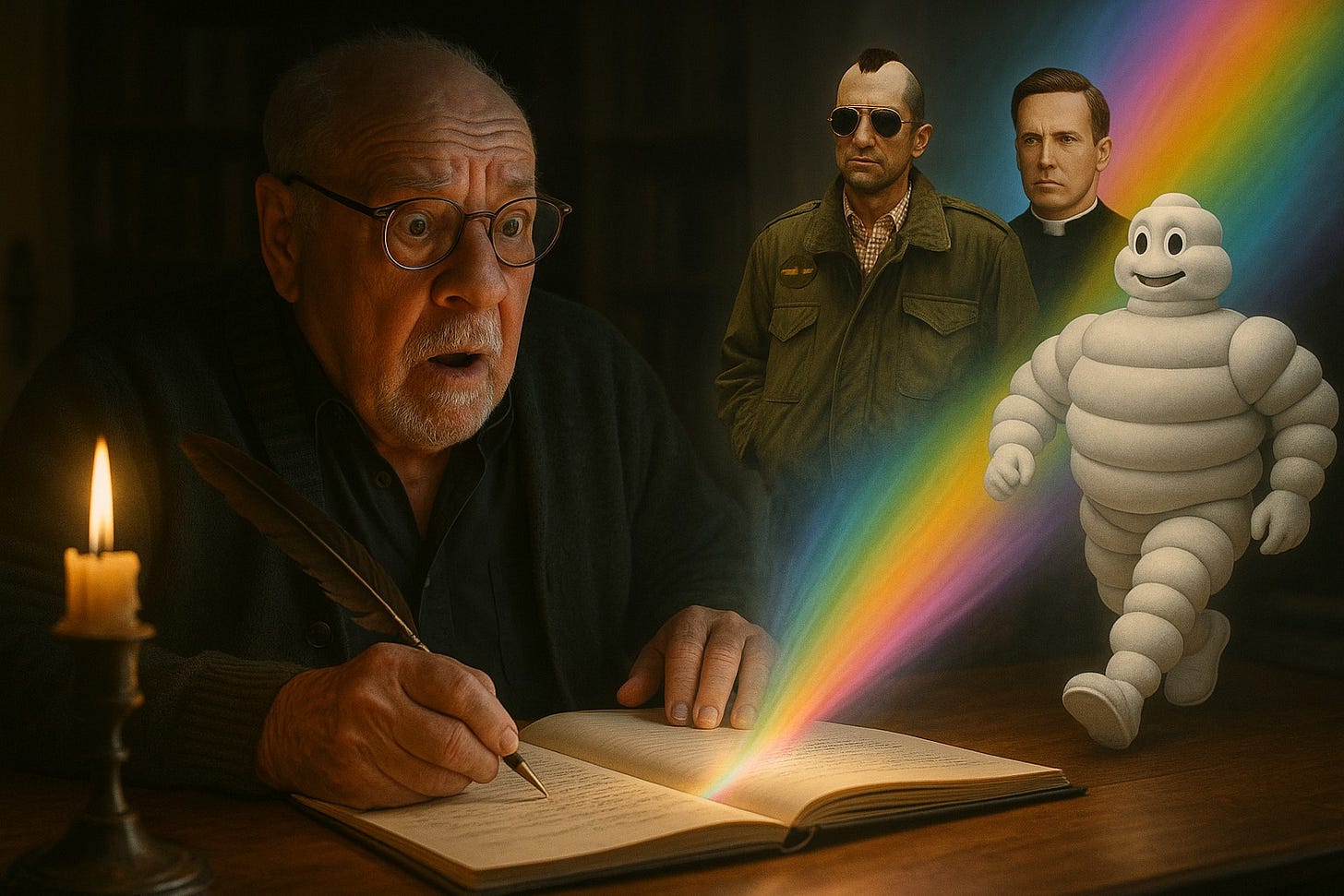

Now, consider Paul Schrader, the legendary screenwriter behind Taxi Driver and others. He’s going about his day, having fun and sharing observations, only to be fired back at with “ARE YOU INSANE!?” and other outraged reactions to his playful curiosity. He posted this image, which some mocked as “Random Michelin Man? WTF, shows AI can’t do things right”.

This ignores a couple of key things.

The first thing is serendipity and surprises — say what you will about AI image-gen malformities, but it can introduce possibilities we didn’t consider. And that might lead somewhere a human tugs on further.

The second (which is related to the first) is that, to understand Schrader’s views, we must look at how and why he’s created for much of his life. He’s an extreme iterator: he rewrites a LOT and shapes his scripts through feedback, be it from friends or, now, AI. It’s a conversation. When he says he’s getting ideas from AI, I interpret that as a rough gem 💎 where he sees potential to iterate to extremes, or it might spark a tangent. But in and of itself, it’s far from the finished work. NOT “one and done”. People come to dismiss AI with the false expectation that it did all the work and went 0➜100, but given Schrader’s history, it makes a lot more sense if he uses AI conversationally, more like a back-and-forth layer-sculpting.

That’s the perspective missing from the conversations I’ve observed.

After all, Schrader is considered a far more thorough reviser than is typical. There’s a blasé misunderstanding that AI just “gives you what you want”, rather than introducing other routes along the proverbial train trip. Branching possibilities have always seemed like a basic benefit to me, and climbing up and down the branches is a hallmark of said playful curiosity.

I have some theories on why this gets missed so much.

Let me back up a bit, then continue advancing forward. And also let me acknowledge what I call a preemptive macro — since I feel it gets disclaimed ad infinitum — in the wake of anti-woke, being pro-AI does NOT mean being ignorant of ethics, energy use, and other concerns which are at the heart of being considerate of one another. The dynamic balance between making meaningful progress and being accountable is damn hard. But moving ahead also means some people choose to be left behind, or fight — rather than adapt and collaborate.

I draw connections between the AIphobia in Hollywood and Ari Aster’s Eddington — while that film is political satire about left vs. right and not about AI, he said this:

"That became the center of the culture wars in this country, where you had people arguing for public health and safety, and then you had people arguing for personal freedoms," Aster told Morning Edition host A Martínez. "It's about a bunch of people living in different realities who are unreachable to each other… It pushes them into deeper convictions and paranoia."

Also:

“I wanted to make a film where everybody’s alienated from each other and has lost track of a bigger world outside of themselves. They only see the dimensions of the small world they believe in, and distrust anything that contradicts this small bubble of certainty,” Aster said, citing the social effects of the COVID pandemic. “We’ve all been trained to see the world through certain windows, but those windows have just become stranger and stranger. Look at what’s happened with the internet. It used to be this thing that we went to, but now it’s something we carry on us. We live inside the internet now.”

This is what I’m seeing with AI views: a dangerous extremism where we are NOT learning things from each other. Aster’s own views of AI are fearful (again, human), and he acknowledges the potential problems with AI enthusiasts describing it as something more akin to a religion. What he doesn’t include, though, are the cultural differences here. For example, in Asia, there’s more enthusiasm about AI because of the collectivist cultures, where if a tool makes society more harmonious (Americans might say “conformist”), then that’s overall a good thing. The individual matters less, and Spock’s words come into focus:

“The needs of the many outweigh the needs of the few, or the one.”

The friction point here has to do with deeply personal creativity conflicting with one’s ability to care for others, or at the least, see things as they do.

Being willing to improve based on others’ feedback, as Paul Schrader does, is its own flavor of humility in acknowledging the bigger picture in every community in the world.

And that’s something only a human can do.